ML-driven Alpha Search and Alpha Allocation on Large Scale Part I: Recent Trends in Machine Learning

Summary of the talk given at the Bloomberg Quantamental Exchange 2023

The series on the topic ‘ML-driven Alpha Search and Alpha Allocation on Large Scale’ consists of the following three parts.

Part 1: Recent Trends in Machine Learning

Part 2: Alpha Search on Large Scale

Part 3: Enhanced Alpha Search and Strategy Allocation on Large Scale

In this part, we will explore the recent trends in machine learning that are shaping academic research.

Introduction

Good afternoon, everyone!

First and foremost, I would like to thank Bloomberg for the invitation to speak to this community of qualified investment professionals and for the opportunity to share my insight on the application of machine learning in finance. To introduce myself, my name is Jin Chung and I am the co-founder of Akros Technologies, where I oversee the overall global business and the research & development process. I previously studied information engineering at the University of Oxford and worked at Intel’s OpenCV team and the Oxford Robotics Institute prior to venturing into quantitative research.

I hope you enjoy the read!

Trends in Machine Learning

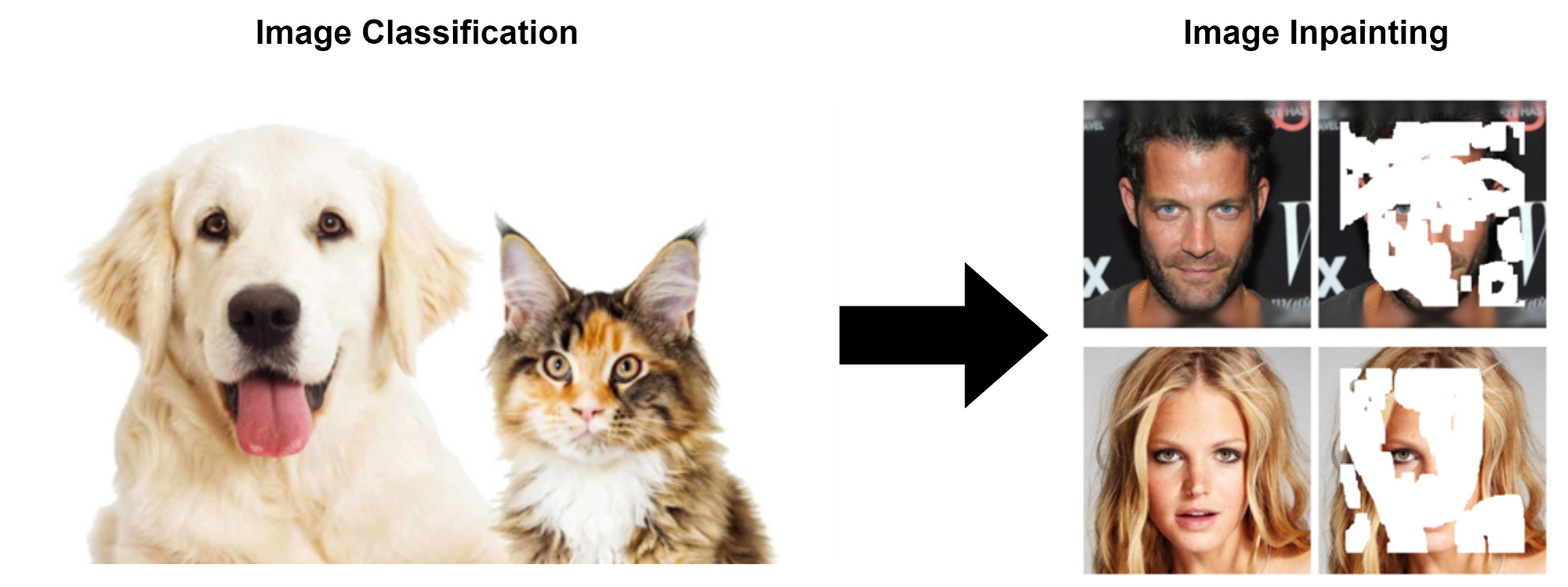

Before delving into the main topic of this talk, I would like to set the stage by discussing the current trends in machine learning that are shaping academic research across multiple domains. In the domain of images, models have moved on from image classification to image inpainting. While traditional models simply categorized images into pre-defined classes or labels like dogs and cats, contemporary models can generate new image content by filling in missing pixels or even creating new regions of existing images.

In the realm of video analysis, the focus of research has shifted from action recognition to video prediction. While earlier models identified specific activities within a video, newer models go a step further and generate plausible future frames or sequences of the video.

Research in natural language processing has moved from sentiment analysis to language generation. While models in the past merely labeled text by its emotional tone with classes like “positive”, “negative” or “neutral”, recent models generate human-like responses to natural language prompts, consistent and coherent with what is asked, whether the prompt is a question, a sentence, or a conversation.

In recent years we have also seen the direction of research move from simulation to product deployment. In the past, machine learning models were often developed and evaluated in simulated environments, with less emphasis on how they might be deployed in real-world applications. While one area of research focuses on large models such as LLMs, which have billions of parameters and are trained on vast amounts of data, more research now focuses on developing machine learning models that can be deployed as services. One example is DALL-E, a generative image model developed by OpenAI that creates images from textual descriptions. Another example is, of course, ChatGPT with GPT-4, which was released only a couple of weeks ago.

Another example (perhaps different from the ones previously mentioned) is tinyML, where researchers from MIT are focusing on more efficient models that require less computation and data yet still produce accurate results. These models have been designed to accept thousands of queries and produce high-quality results, rendering them practical for a wide range of real-life applications. The efforts of tech companies to focus on product deployment and on small yet accurate models reflect a growing awareness of the need to create greater practical value.

From a broader perspective, we can therefore identify two major trends. The first is that research is moving from discriminative models to generative models. Whereas discriminative models are designed to predict a target from input variables, generative models can create new samples of data that are not necessarily identical to the data they have seen. The second is that research is advancing toward product deployment, with greater efficiency to enhance practical significance.
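To make the distinction between discriminative and generative models concrete, here is a minimal sketch on toy one-dimensional data. It is not taken from the talk: the data, the function names, and the choice of logistic regression and per-class Gaussians are all assumptions made purely for illustration. The discriminative model only learns to map an input to a label, while the generative model learns how each class is distributed and can therefore draw new samples.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy 1-D data (illustrative assumption): two classes from different Gaussians.
x = np.concatenate([rng.normal(-2.0, 1.0, size=500),
                    rng.normal(2.0, 1.0, size=500)])
y = np.concatenate([np.zeros(500), np.ones(500)])


def fit_discriminative(x, y, lr=0.1, steps=2000):
    """Logistic regression by gradient descent: models p(y | x) only."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(w * x + b)))
        w -= lr * np.mean((p - y) * x)  # gradient of the log-loss w.r.t. w
        b -= lr * np.mean(p - y)        # gradient of the log-loss w.r.t. b
    return w, b


def fit_generative(x, y):
    """Per-class Gaussian fit: models p(x | y), so new x can be sampled."""
    return {c: (x[y == c].mean(), x[y == c].std()) for c in (0.0, 1.0)}


def sample(params, label, n, rng):
    """Draw n new data points for the given class from the fitted Gaussian."""
    mean, std = params[label]
    return rng.normal(mean, std, size=n)


w, b = fit_discriminative(x, y)
predictions = (w * x + b > 0).astype(float)  # the model can only label inputs
accuracy = np.mean(predictions == y)

params = fit_generative(x, y)
new_points = sample(params, 1.0, 5, rng)     # the model can create new inputs
```

The discriminative model answers "which class is this point?", whereas the generative model can also answer "what would another point from this class look like?". Modern generative models are vastly more expressive than a fitted Gaussian, but the difference in what is being modelled is the same.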

Application of Recent Developments in the Direction of Quantitative Research

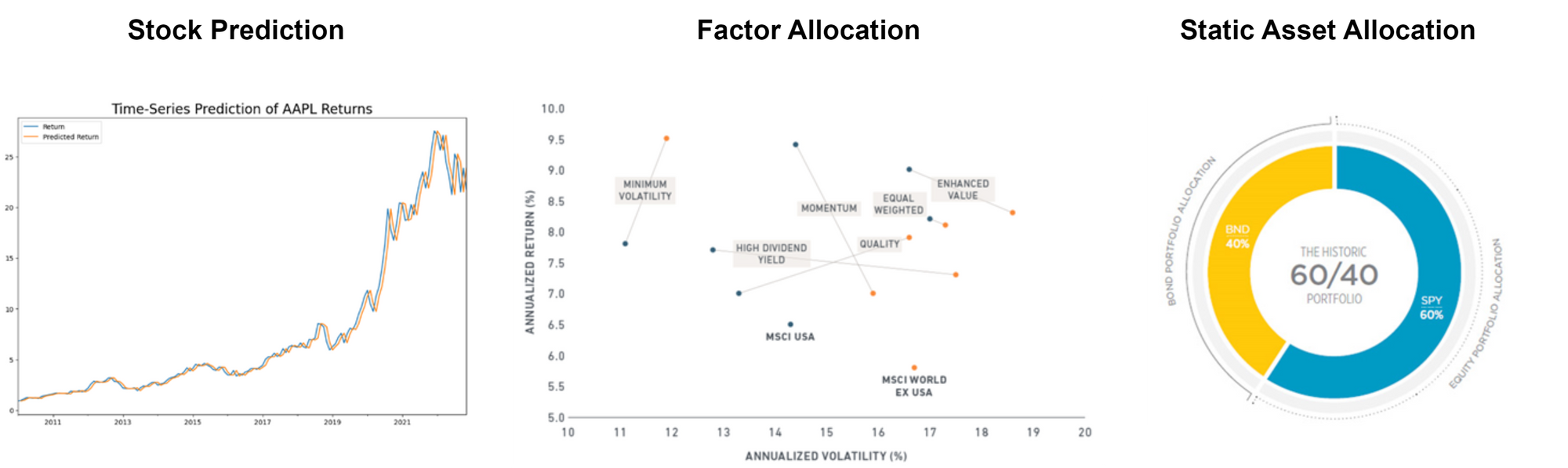

Such development in machine learning has prompted me to reconsider whether we have been asking the right research questions and subsequently taking the right direction of research. Traditionally, quantitative research has been focused on discriminative tasks such as stock prediction, where the models were asked to classify the future return of stocks, and factor allocation where the models were given the task to optimize the weight of pre-determined factors.

But given the recent developments in machine learning, can we start asking ourselves new questions that lead to a different approach in quantitative research? Can we start to ask questions like: is there a way to train generative models to create investment strategies on large scale?

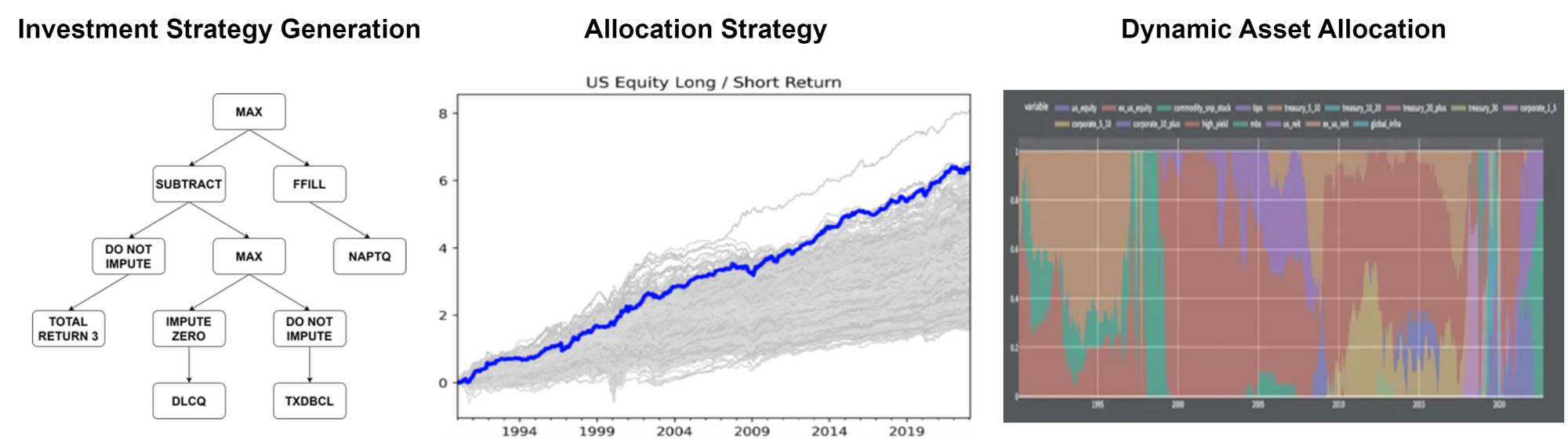

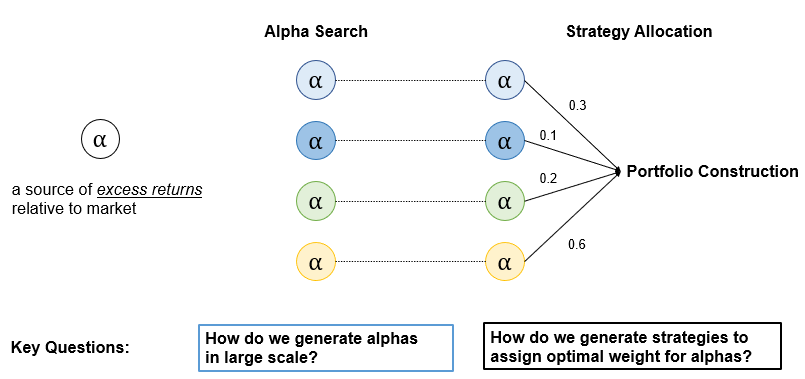

And so, I am excited to talk about the kind of research that we are conducting at Akros: ML-driven alpha search and alpha allocation on large scale. I have essentially split this talk into two parts. One: Given that alpha is a source of excess returns relative to the market, how do we generate alphas on large scale? Two: Given the alphas that we have found, how do we generate allocation strategies?
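As a rough illustration of "excess returns relative to the market", the sketch below estimates alpha as the intercept of a least-squares fit of strategy returns on market returns. This is one common textbook convention, not necessarily the definition used at Akros; the function name `estimate_alpha_beta`, the synthetic return series, and the omission of a risk-free rate are all assumptions made for the example.

```python
import numpy as np


def estimate_alpha_beta(strategy_returns, market_returns):
    """Least-squares fit of r_strategy = alpha + beta * r_market + noise.

    Assumes both series are per-period returns of equal length and ignores
    the risk-free rate for simplicity.
    """
    r_s = np.asarray(strategy_returns, dtype=float)
    r_m = np.asarray(market_returns, dtype=float)
    beta = np.cov(r_s, r_m, ddof=1)[0, 1] / np.var(r_m, ddof=1)
    alpha = r_s.mean() - beta * r_m.mean()
    return alpha, beta


# Synthetic daily returns (hypothetical numbers, for illustration only).
rng = np.random.default_rng(42)
market = rng.normal(0.0005, 0.01, size=2500)
strategy = 0.0003 + 0.8 * market + rng.normal(0.0, 0.002, size=2500)

alpha, beta = estimate_alpha_beta(strategy, market)
print(f"alpha per period: {alpha:.5f}, beta: {beta:.3f}")
```

Here `beta` captures how much of the strategy's return is explained by market moves, and `alpha` is what remains on average once that exposure is removed.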

In the subsequent sections, I will be talking about the direction that we take in generating alphas and allocating multiple strategies on large scale.

Thank you for your time. For those who are interested in learning more about Akros Technologies, you can contact us via email, and here are a few links you could refer to!